# plot NDVI

plt.imshow(np.squeeze(ndvi))

plt.colorbar()<matplotlib.colorbar.Colorbar at 0x142c6c50850>

This project aims to identify different vegetation types using the concept of Linear Spectral Unmixing. We use a UAV orthoimage of a study area in the Peruvian Amazon forest. The image was clipped for testing purposes and contains only a part of the existing biodiversity.

The entire project is based on the assumption that, for example, Sentinel-2 imagery with a spatial resolution of 10 m cannot capture all biodiversity because we will always have the problem of mixed pixels, i.e., each pixel is not "pure" but consists of different "pure" materials.

Therefore, an attempt was made here to identify different vegetation types in the UAV data that we assume to be "pure" material. This information is then applied to the Sentinel-2 image to determine how much of these different vegetation types can be found in a sentinel pixel.

from unmixing.utils import as_array

from unmixing.utils import pixel_to_xy

from unmixing.lsma import ravel_and_filter

from unmixing.transform import mnf_rotation

from unmixing.utils import array_to_raster, dump_raster

from unmixing.visualize import FeatureSpace

from unmixing.transform import mnf_rotation

from unmixing.lsma import convex_hull_graham

from unmixing.utils import spectra_at_xy

from unmixing.lsma import FCLSAbundanceMapper

from unmixing.lsma import endmembers_by_maximum_volume

import json

import pysptools.eea as sp_extract

import numpy as np

import pysptools.util as sp_utils

from matplotlib import pyplot as plt, cm

%matplotlib inline

from osgeo import gdalIn the first steps, we explore the data and get rid of all other surface types that are not vegetation and perform a noise and dimensionality reduction.

# load UAV image as 3D NumPy array

image, gt, wkt = as_array('C:/Users/Henrike/Downloads/unmixing-master/unmixing-master/data/test.tif')GT returns the corner coordinates, pixel size, etc. of the raster

gt(684544.7354229867,

0.07000869820171894,

0.0,

9572983.114587901,

0.0,

-0.07000870104953134)WKT is the Well-Known Text string describing the projection of the raster.

wkt'PROJCS["WGS 84 / UTM zone 18S",GEOGCS["WGS 84",DATUM["WGS_1984",SPHEROID["WGS 84",6378137,298.257223563,AUTHORITY["EPSG","7030"]],AUTHORITY["EPSG","6326"]],PRIMEM["Greenwich",0,AUTHORITY["EPSG","8901"]],UNIT["degree",0.0174532925199433,AUTHORITY["EPSG","9122"]],AUTHORITY["EPSG","4326"]],PROJECTION["Transverse_Mercator"],PARAMETER["latitude_of_origin",0],PARAMETER["central_meridian",-75],PARAMETER["scale_factor",0.9996],PARAMETER["false_easting",500000],PARAMETER["false_northing",10000000],UNIT["metre",1,AUTHORITY["EPSG","9001"]],AXIS["Easting",EAST],AXIS["Northing",NORTH],AUTHORITY["EPSG","32718"]]'# Check the no data value of the image

# file = 'C:/Users/Henrike/Downloads/unmixing-master/unmixing-master/data/test.tif'

# test0 = gdal.Open(file)

# band = test0.GetRasterBand(1)

# band.GetNoDataValue()0.0# Create new array that contains boolean of locations where image has no data values

img_na = np.any(image == 0.0, axis = 0)img_na.shape(3689, 6414)# copy image to new array and set all zeros to 9999, because otherwise NDVI cannot be calculated

arrImg = np.copy(image)

arrImg[:, img_na == True] = 9999Here we use 9999 becasue otherwise the normalization would throw an error.

# Convert array to gdal.Dataset (raster), dump to file

#dump_raster(array_to_raster(arrImg, gt, wkt), 'C:/Users/Henrike/SoftwareDev_Final/test_NoData_v3.tiff', nodata = np.NaN)# create function to normalize image values between 0 and 1

def NormalizeData(data):

return (data - np.min(data)) / (np.max(data) - np.min(data))# apply function to image

scaled_image = NormalizeData(arrImg)

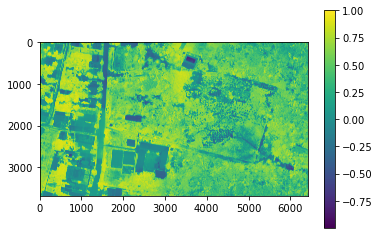

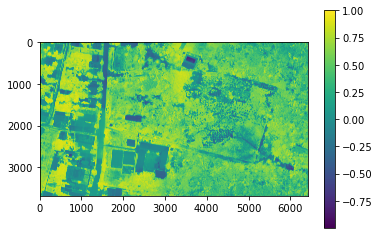

scaled_image.shape(5, 3689, 6414)In order to remove all other surface types that are not vegetation, we calculate the NDVI and set a threshold based on visual inspection in QGIS.

# Calculate NDVI

red = scaled_image[2]

nir = scaled_image[4]

ndvi = np.divide((nir - red), (nir + red))#dump_raster(array_to_raster(ndvi, gt, wkt), 'C:/Users/Henrike/SoftwareDev_Final/NDVI.tiff', nodata = np.NaN)# plot NDVI

plt.imshow(np.squeeze(ndvi))

plt.colorbar()<matplotlib.colorbar.Colorbar at 0x142c6c50850>

# Create classes and apply to NDVI results

ndvi_mask = np.where(ndvi > 0.4, ndvi, -9999)

ndvi_mask.shape(3689, 6414)# Apply mask to scaled image

scaledImg_masked = np.copy(scaled_image)

scaledImg_masked[:, ndvi_mask == -9999] = -9999

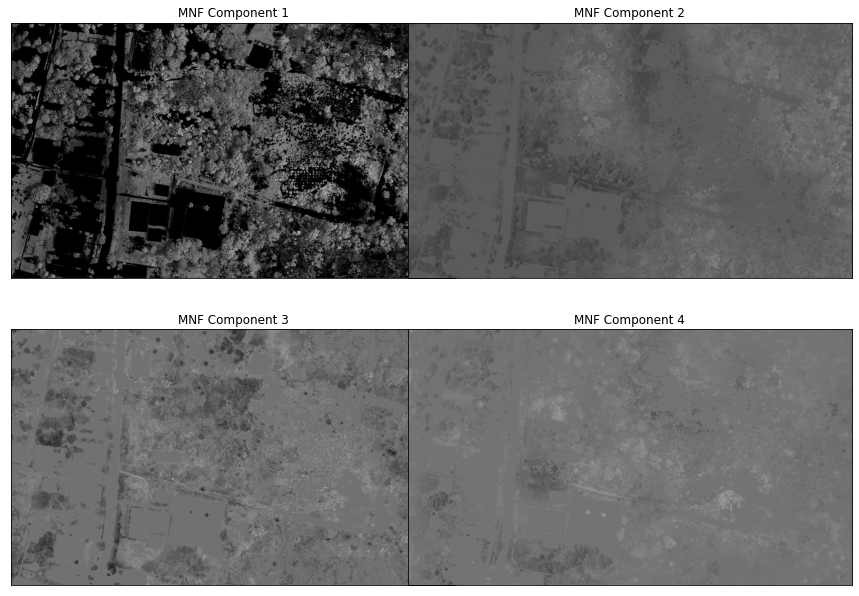

scaledImg_masked.shape(5, 3689, 6414)#dump_raster(array_to_raster(scaledImg_masked, gt, wkt), 'C:/Users/Henrike/SoftwareDev_Final/test_NDVI_masked.tiff', nodata = np.NaN)To reduce the noise and dimensionality of the UAV image, a minimum noise fraction (MNF) transformation is applied to the raster.

For further information, refer to https://github.com/arthur-e/unmixing/blob/master/docs/Overview_LSMA_in_Python.ipynb

hsi_post_mnf = mnf_rotation(scaledImg_masked)hsi_post_mnf.shape(6414, 3689, 5)hsi_post_mnf.T.shape(5, 3689, 6414)#dump_raster(array_to_raster(hsi_post_mnf.T, gt, wkt), 'C:/Users/Henrike/Downloads/unmixing-master/unmixing-master/output/test_MNFRotation.tiff', nodata = -9999)The plot shows that the first component explains almost all the variance. This can also be seen in the following plot.

fig = plt.figure(figsize = (16, 16))

fig.subplots_adjust(wspace = -0.2)

for i in range(1, 5):

ax = fig.add_subplot(320 + i, title = 'MNF Component %d' % i)

ax.imshow(hsi_post_mnf.T[i - 1,:,:], cmap = cm.gray)

ax.get_xaxis().set_visible(False)

ax.get_yaxis().set_visible(False)

Spectral unmixing is based on the assumption that each pixel can be represented as a (linear) combination of the "pure" material in an image, i.e. each pixel is a mixture of different "pure" signals. Usually, the endmembers are collected in the field, e.g., with an ASD field spectroradiometer, to determine the spectral reflectance of the pure material. However, this is costly and time-consuming, so attempts are made to obtain endmembers from the image itself. Due to the fact that the study area has a fairly diverse range of species, we attempt to identify endmembers from the UAV data because the resolution is much better.

The Pixel Purity Index (PPI), for example, creates several random projections of the given feature space and then counts how many times each pixel appears in the corners (so in the extremes) across the respective projections.

Instead of using the PPI, here we the Fast Iterative Puritz Index (FIPPI) is used. It has several advantages over the PPI, e.g. instead of using randomly generated vectors as initial endmembers, the FIPPI produces an intial set of endmembers to speed up the process and iteratively tries to find the optimal set of potential endmembers.

Further information can be found here: Chang, C.-I. (2006).

fippi = sp_extract.FIPPI()

# find 3 potential endmembers and set maximum iteration to 5000

iter_members = fippi.extract(hsi_post_mnf[...,0:3], 3, maxit = 5000)fippi.get_idx()

# Switch pixel coordinates from (p, m, n) to (n, m, p)

coords = [(y, x) for x, y in fippi.get_idx()]

pixel_to_xy(coords, gt = gt, wkt = wkt, dd = True)[(-3.861669012382913, -73.33802684504938),

(-3.862376720033442, -73.33701193223709),

(-3.863029163231802, -73.33654801527393),

(-3.8627835156699404, -73.33654471191169),

(-3.8630348016130243, -73.33651774948143),

(-3.863964042293433, -73.33515699294598),

(-3.8621155427946365, -73.3349053222192)]# Convert array to gdal.Dataset (raster), dump to file

dump_raster(array_to_raster(scaledImg_masked, gt, wkt), 'C:/Users/Henrike/Downloads/unmixing-master/unmixing-master/output/test_arrayImage.tiff', nodata = -9999)

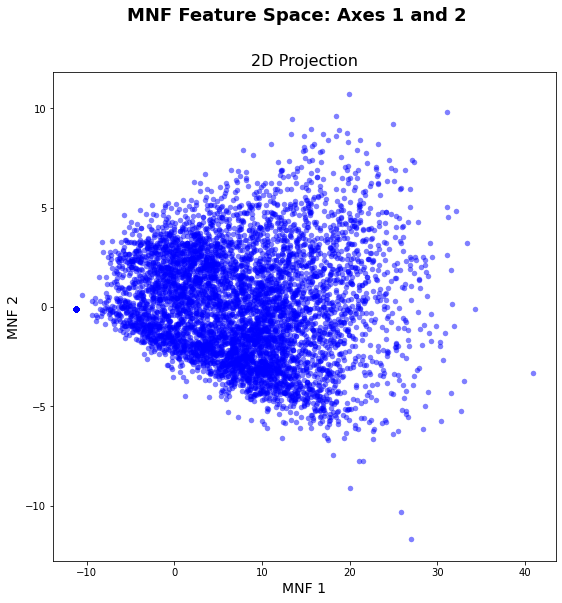

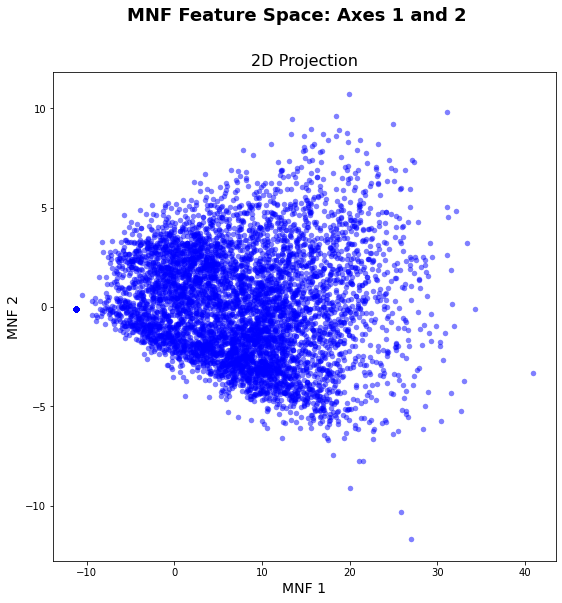

# Set feature_limit = 10000 to minimize computational time

vis_fs = FeatureSpace(path = 'C:/Users/Henrike/Downloads/unmixing-master/unmixing-master/output/test_arrayImage.tiff', nodata = -9999,

feature_limit = 10000)

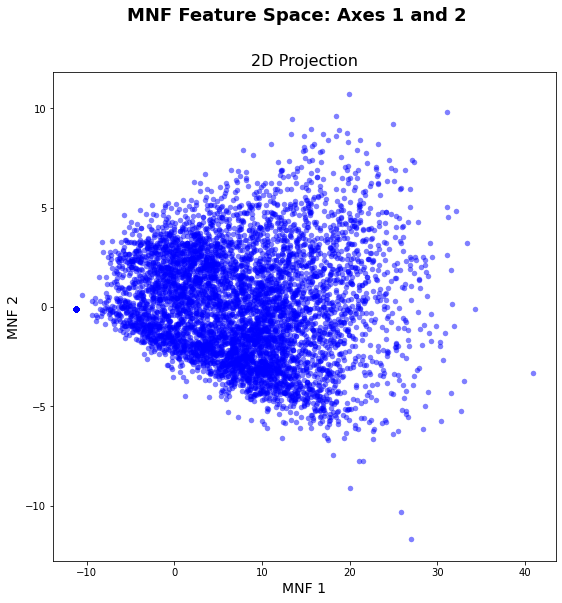

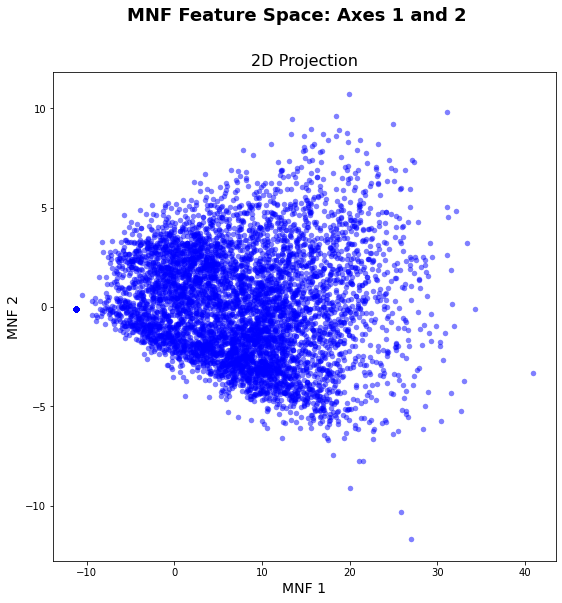

vis_fs.plot_feature_space()

%matplotlib inline

%config InlineBackend.close_figures=False

vis_fs.plot_feature_space()

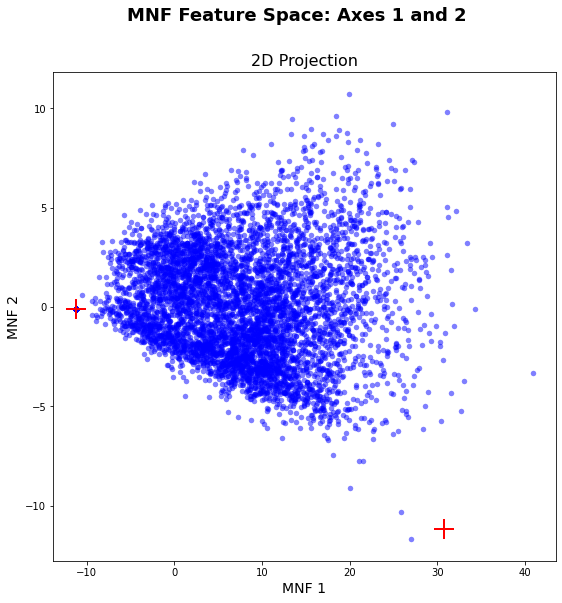

# Get MNF spectra from the HSI cube using the lat-lon coordinates

lat_lon_coords = pixel_to_xy(coords, gt = gt, wkt = wkt, dd = True)

mnf_spectra = spectra_at_xy(hsi_post_mnf.T, lat_lon_coords, gt, wkt, dd = True)

vis_fs.plot_spectral_points(mnf_spectra)

plt.show()

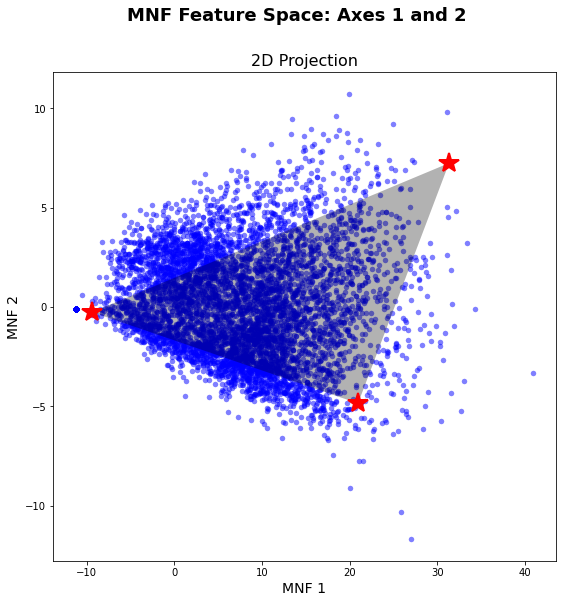

As you can see in the graphic above, the automatic endmember selection did not work perfectly. The only endmember that was selected well is the very dark pixel on the far left of the graph. This is not surprising, since we are only looking at vegetation surfaces, which is very similar in spectral behavior.

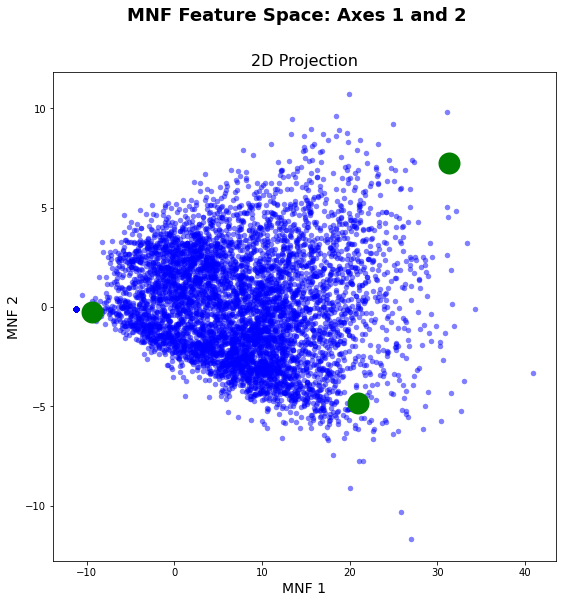

Nevertheless, we want to try to further improve the selection of endmembers. To this end, a set of potential endmembers was collected in SNAP for very bright surfaces, with high values in MNF 1 and medium values in MNF 2 and bright surfaces with high MNF 2 and medium MNF 1 values. The endmember selection is then improved with the Graham scan, using a convex hull to find the potential endmembers.

# load potential endmembers that were selected in QGIS

with open('C:/Users/Henrike/Downloads/unmixing-master/unmixing-master/data/test_endember.json', 'r') as stream:

veg_pifs = json.load(stream)

targets = veg_pifs.copy()

targets{'bright': [[684576.6244, 9572808.7579]],

'very bright': [[684850.7084, 9572767.5228]]}# Flatten the dictionary to a list

endmember_coords = []

for target in targets.keys():

endmember_coords.extend(targets[target])

# A known shade endmember derived from SNAP

shade_em = (684595.0367,9572841.872)

endmember_coords.append(shade_em)

# Get the MNF spectra for each potential endmember

endmember_spectra = spectra_at_xy(hsi_post_mnf.T, endmember_coords, gt = gt, wkt = wkt)

# Find endmembers using Graham's scan

idx, hull = convex_hull_graham(endmember_spectra[...,0:2].tolist(), indices = True)plt.close()

vis_fs.plot_feature_space()

vis_fs.plot_spectral_points(hull, fmt = 'go')

plt.show()

# Get the coordinate pairs that appeared in the convex hull

hull_coords = [endmember_coords[i] for i in idx]

# A follow-up search by maximum volume

ems, em_coords = endmembers_by_maximum_volume(hsi_post_mnf.T, hull_coords,

shade_em, gt = gt, wkt = wkt)

plt.close()

vis_fs.plot_feature_space()

vis_fs.plot_spectral_points(ems, fmt='r*')

vis_fs.plot_2d_mixing_space(ems)

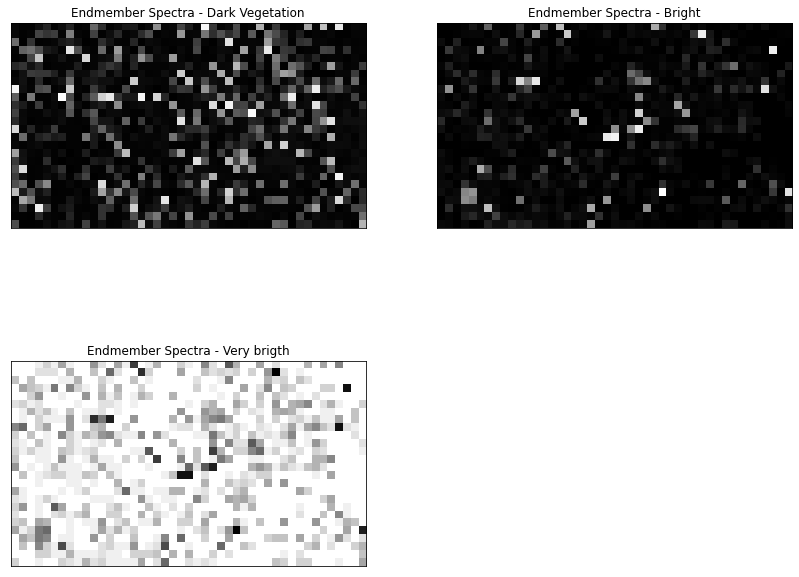

A Sentinel-2 Level 2A image for the 03.08.2020 was acquired from the Open Access Hub with a cloud coverage less than 5%. The image was then preprocessed in SNAP. Preprocessing included cloud masking with the Sen2Cor algorithm, subsetting the bands to RGB, NIR, Red Edge 1-3, and SWIR 1 & 2 bands, and resampling of all bands to 10 m.

# laod image

s2, gt, wkt = as_array('C:/Users/Henrike/Downloads/unmixing-master/unmixing-master/data/S2_20200803.tiff')

s2.shape(7, 26, 45)# reduce noise and dimensionality

mnf = mnf_rotation(s2)

fcls_mapper = FCLSAbundanceMapper(s2, gt, wkt, processes = 6)# apply fully contraint LSMA

result = fcls_mapper.map_abundance(ems)result.shape(758, 535, 3)fig = plt.figure(figsize = (14, 14))

fig.subplots_adjust(hspace = -0.2)

endmember_labels = ['Dark Vegetation', 'Bright', 'Very brigth']

for i in range(0, 3):

ax = fig.add_subplot(221 + i, title = 'Endmember Spectra - %s' % endmember_labels[i])

ax.imshow(result.T[i,...], cmap = cm.gray)

ax.get_xaxis().set_visible(False)

ax.get_yaxis().set_visible(False)

#dump_raster(array_to_raster(result.T, gt, wkt), 'C:/Users/Henrike/Downloads/unmixing-master/unmixing-master/output/test_S2_result.tiff', nodata = -9999)As we can see in the plots above, the results are not perfect. However, this can be related to the fact that we only used a small subset of the UAV and the Sentinel-2 image. In addition, we didn't upscale the extracted spectra to the Sentinel-2 resolution, which could also affect the outcome. Furthermore, we only extracted 3 endmember from the image, but as we can see, there In a next step, it would be nice to go further and extract more than 3 endmember. This was yet not possible because than the abundance mapper needs some adjustments to allow more unknowns than equations in the LSMA model.